So, is Bun a full-fledged substitute for Node.js or is it just another JavaScript fling?

These days, Node.js is one of the most popular technologies for web development and a leading environment for executing JavaScript on a server. As technology evolves, new trends appear in web development, and in light of the criticism of Node.js, including from its creator Ryan Dahl, alternative server-side JavaScript runtime environments such as Deno and Bun are emerging.

The Bun runtime environment, due to its unique features and advantages, is positioned by its creators not merely as a worthy but, at times, as an indisputable alternative to Node.js. The frequency with which Bun is mentioned in tech-related resources hints at a revolution unfolding in the world of server-side JavaScript. But is this truly the case, or are we witnessing a minor local uprising? In this article, I will try to answer these questions.

What is a JavaScript runtime environment?

For a long time, the only place JavaScript could be used was in web browsers. In 2009, Ryan Dahl introduced a new technology to the world called Node.js.

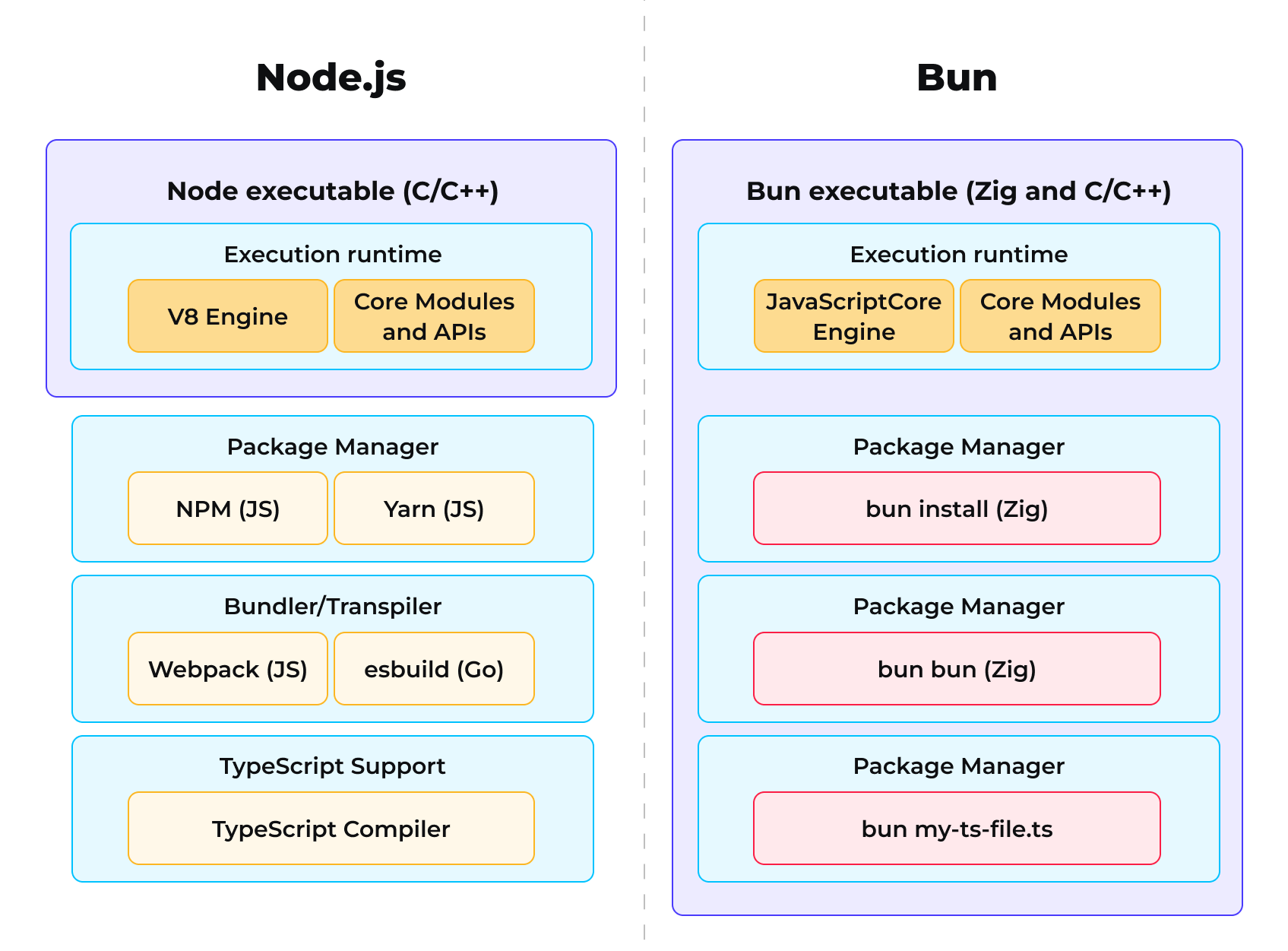

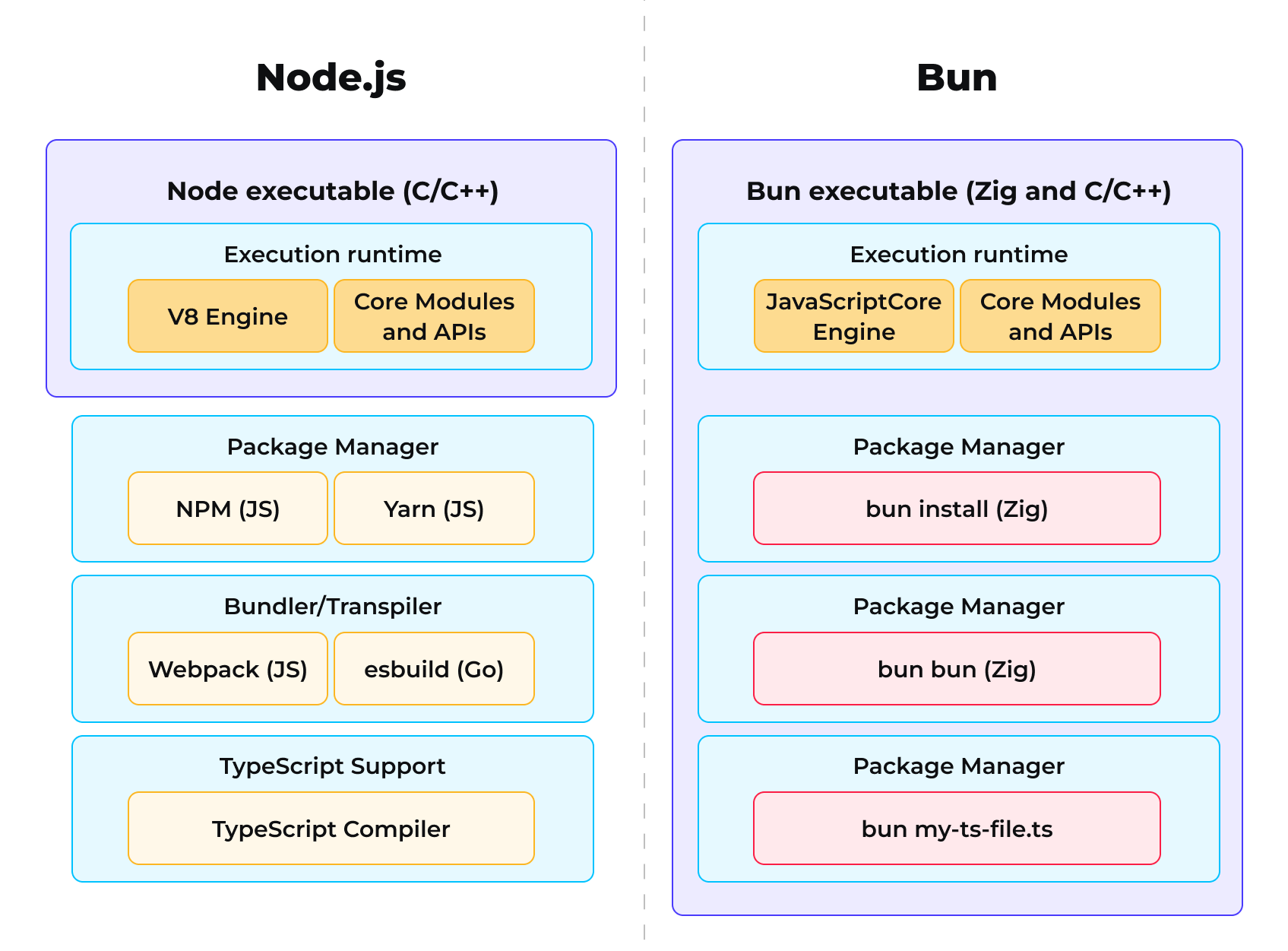

Node.js was built around the V8 engine, the same one used in the Google Chrome browser, along with a set of libraries and tools to enable asynchronous I/O architecture and interaction with the operating system [server]. The combination of these components formed a runtime environment.

As time passed, both the strengths and weaknesses of the platform became evident, which Ryan Dahl himself highlighted in his well-known presentation, “10 Things I Regret about Node.js.”

What’s Bun’s role in all of this?

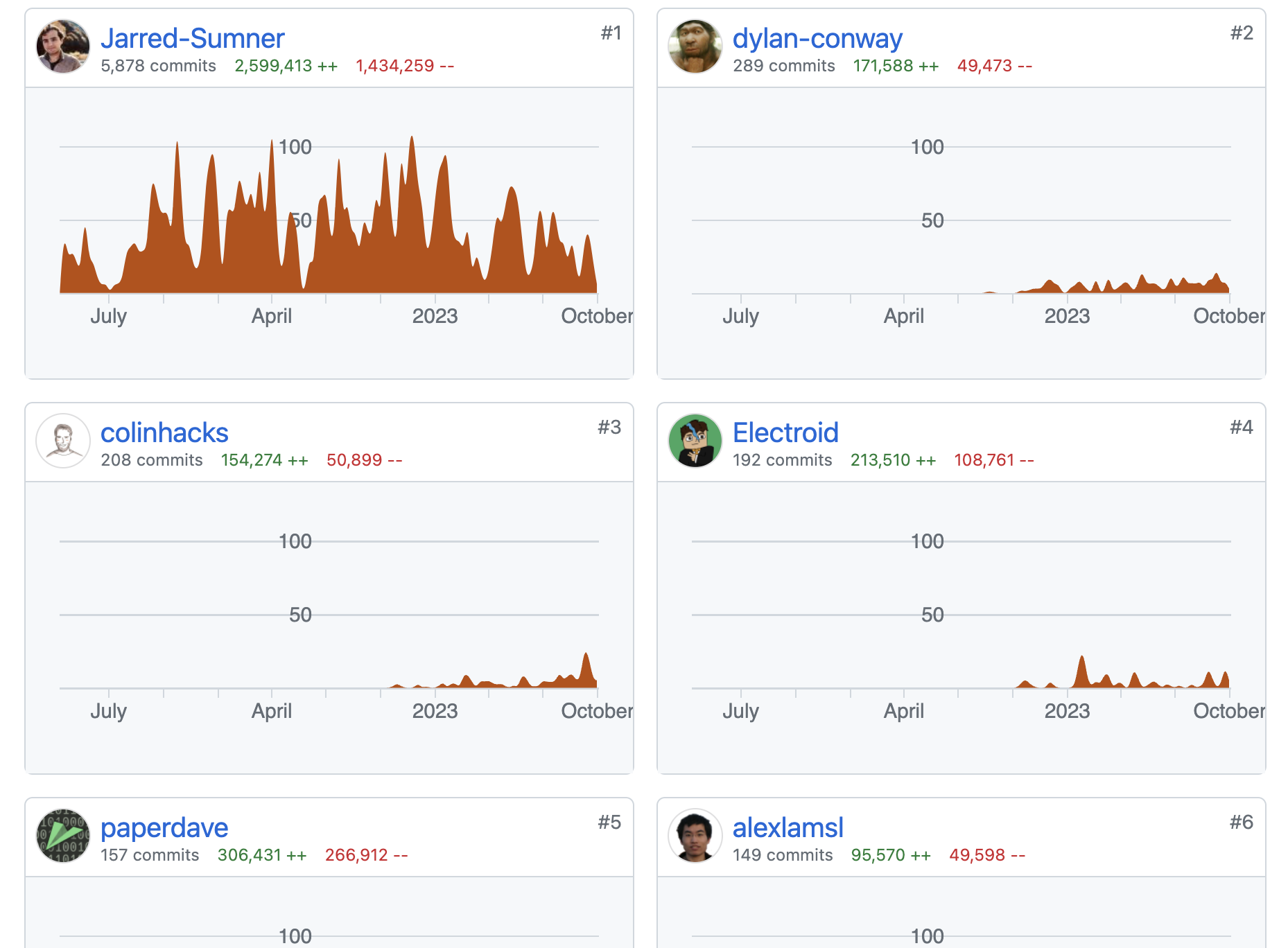

While the creator of Node.js is actively promoting his new platform, Deno, Jarred Sumner spotted a bunch of issues with Node.js and decided to develop a new runtime environment that could revolutionize server-side JavaScript (JS) development: Bun.

As an alternative server-side option, the Bun JavaScript runtime has been around for over a year. Over this period, the developer community and enthusiasts have rigorously tested this environment, provided their feedback, and engaged directly in the development process. September 2023 saw the official release of Bun 1.0, which is production-ready according to its creators. Bun has often been presented as a drop-in replacement for Node.js, implying that you can transition from Node.js to Bun with just a few clicks and commands. In addition, Bun offers several advantages over Node.js, including:

- Built-in TypeScript support

- Built-in bundler

- Built-in test runner

- Native Node.js API support

- Improved performance

How does it operate?

The Bun runtime was created with a dual purpose in mind: on one hand, to simplify the kind of development done on Node.js, and on the other hand, to enhance performance wherever possible. To achieve this, Bun is written in Zig, a low-level programming language that enables a high level of optimization. Additionally, instead of V8, Bun uses JavaScriptCore, the engine used in the Safari browser, which is said to deliver better performance than V8.

Since Node.js didn’t provide a transpiler, a bundler, TypeScript support, and other essential features out of the box, developers had to install and maintain a lot of third-party libraries and tools just to run or build a project. Bun solves these problems by offering many of these things out of the box.

In the image below, you’ll find a side-by-side comparison of Node.js and Bun:

Figure 1. Node.js vs. Bun.js

For example, Bun allows you to run files with .js, .jsx, .ts, and .tsx extensions immediately after installation thanks to its built-in transpiler. No extra libraries or tools need to be installed; it just works.
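To make this concrete, here is a minimal sketch of a TypeScript file that Bun should be able to execute directly, with no separate compile step. The file name greet.ts, the Greeting interface, and the formatGreeting function are hypothetical names chosen for illustration; they are not part of Bun or of the project discussed later.

```typescript
// greet.ts (hypothetical file name, used only for this illustration)

// A plain TypeScript interface: this syntax is not valid JavaScript,
// so something has to strip the types before the code can run.
interface Greeting {
  name: string;
  runtime: string;
}

// Builds a greeting string from a typed argument.
function formatGreeting({ name, runtime }: Greeting): string {
  return `Hello, ${name}! Running on ${runtime}.`;
}

// Prints "Hello, world! Running on Bun."
console.log(formatGreeting({ name: "world", runtime: "Bun" }));
```

Assuming Bun is installed, running `bun run greet.ts` should print the greeting right away, because the built-in transpiler removes the type annotations on the fly. A classic Node.js setup would typically need an extra tool such as tsc or ts-node for the same file.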

The same applies to support for CommonJS and ES modules. Files with .cjs and .mjs extensions are supported right after Bun is installed. And even if your project doesn’t use these extensions, ‘require’ and ‘import’ will work without any additional settings.

This is just a small part of what Bun streamlines and offers out of the box, and I encourage you to visit its main website for more details.

Is Bun.js really that good?

As I mentioned above, the developers positioned Bun.js as a replacement for Node.js that doesn’t require any additional configuration or customization. Just install it and you’re all set.

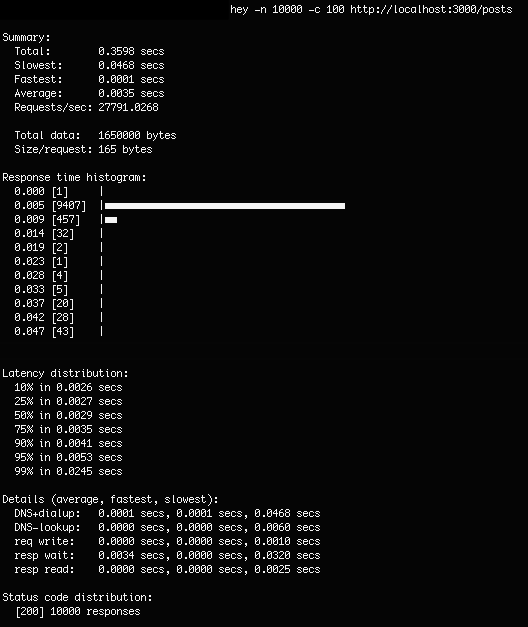

I decided to check whether everything is really that good using a small production-ready project on GitHub that uses Node.js, Express, and MongoDB. Having forked this repository, I installed Bun as described in the official documentation:

curl -fsSL https://bun.sh/install | bash

Next, in the corresponding folder with the project, I deleted node_modules and ran the command:

bun install

As a result, Bun installed all the necessary dependencies and generated its binary lockfile, bun.lockb, which is somewhat akin to yarn.lock or package-lock.json. The speed at which Bun installs the dependencies is also worth noting: the process is much faster than with npm. This speed is the result of numerous optimizations in how Bun handles and caches dependencies. However, these same optimizations can also cause difficulties and unexpected bugs for other developers.

Right before running the project, I changed the script responsible for launching it in development mode so that it uses Bun:

"dev": "cross-env NODE_ENV=development bun src/index.js",
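When changing launch scripts like this, it can be useful to confirm which runtime actually executes the entry point. The following is a minimal sketch, not part of the original project: the detectRuntime function and the Runtime type are hypothetical names, and the check relies on the assumption that Bun exposes its version as process.versions.bun. Bun also reports a Node.js-compatible version in process.versions.node, so the Bun check has to come first.

```typescript
// Hypothetical helper for illustration; not part of the forked project.
type Runtime = "bun" | "node" | "unknown";

// Inspects a globals object (defaults to globalThis) and reports the runtime.
// The parameter exists so the function can be exercised with fake globals.
function detectRuntime(
  globals: Record<string, unknown> = globalThis as unknown as Record<string, unknown>
): Runtime {
  const proc = globals["process"] as
    | { versions?: Record<string, string | undefined> }
    | undefined;
  const versions = proc?.versions ?? {};

  // Check Bun first: it also sets versions.node for compatibility.
  if (typeof versions["bun"] === "string") return "bun";
  if (typeof versions["node"] === "string") return "node";
  return "unknown";
}

console.log(`Running on: ${detectRuntime()}`);
```

Placed at the top of an entry file, a check like this makes it obvious whether a script such as "dev" still ends up being run by Node.js through some wrapper.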

Then, I launched the application with this command:

bun start

Unfortunately, the expected start did not occur. The console displayed the following error:

error: Cannot find package "mongodb-extjson" from "/Users/…./node_modules/mongodb/lib/core/utils.js"

I was not the only one to encounter this error; other developers have reported it as well. Moreover, Bun failed to work not only with my current project but also with projects written using the Nest.js framework. There are also problems with the pm2 + Bun combination.

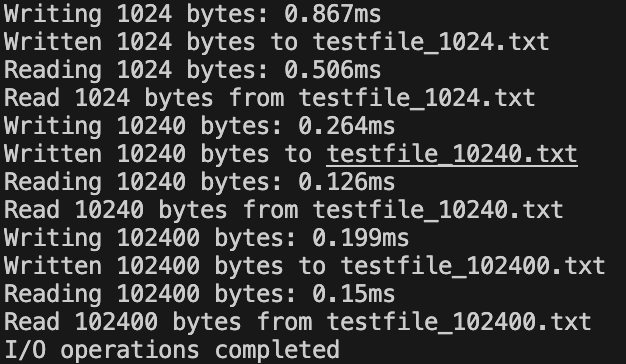

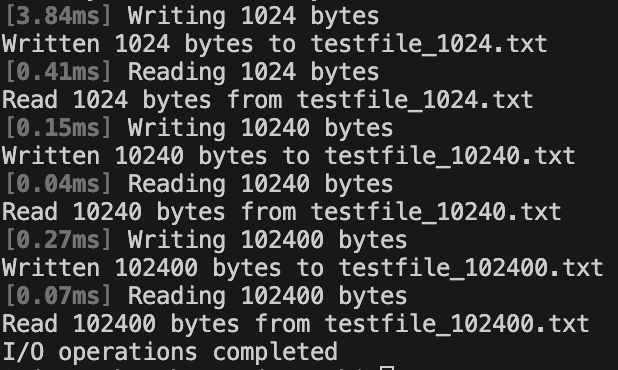

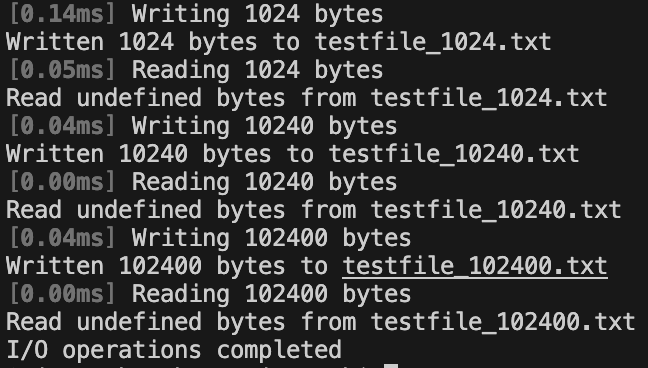

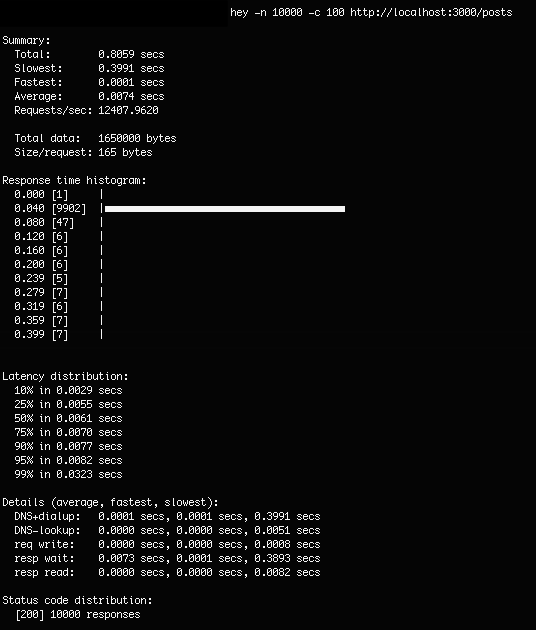

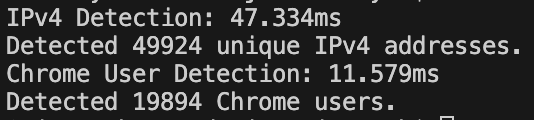

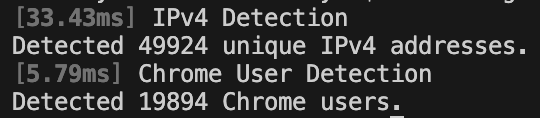

Figure 2.
