
Edge computing is an important technology that enables new experiences and business opportunities by making better use of the total connectivity of billions of devices.

Let’s recap what edge computing is all about and look at the latest updates and trends, especially why AI and edge are the perfect combination.

Manufacturing, retail, pharma, transportation, real estate (smart homes and buildings), and digital cities all demand efficiency and productivity improvements, and technology is the main hope for achieving them.

Defect-free factories with predictive maintenance, automatic defect detection, fast reactions to events, and autonomous drones, cars, and planes are happening now.

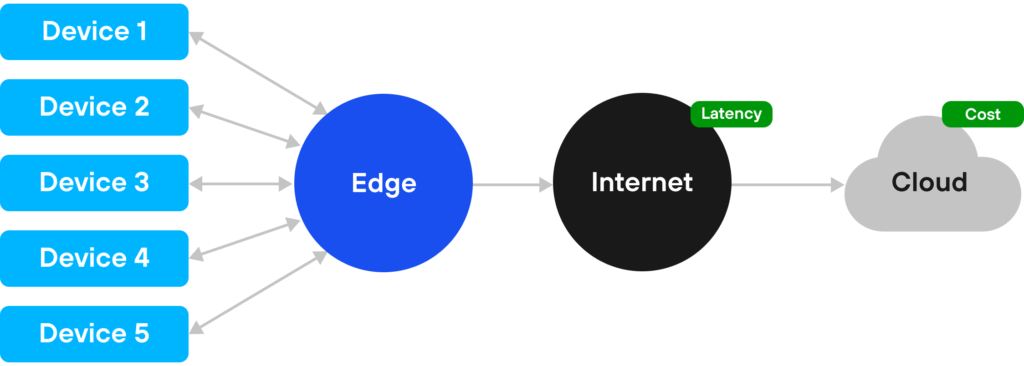

Cloud-like capabilities are moving to the edge, bringing industries closer to the point where data is analyzed faster, without transmitting all of it to the cloud, and with lower latency and cost.

Data is at the center of everything, and where there’s data there has to be AI, hence the term Edge AI. The fusion of IoT, edge and AI already exists and is being used more and more frequently.

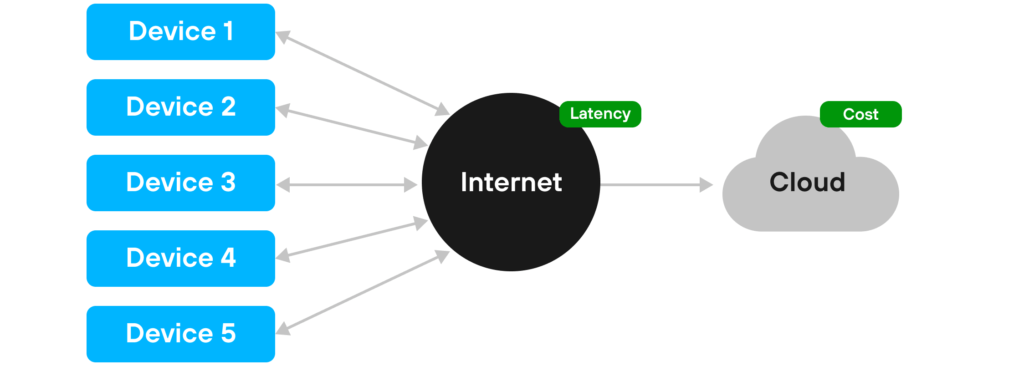

Picture #1: IoT with cloud

Picture #2: IoT with Edge and Cloud

Fusion of different data sources, like cameras, sensors, and lidars, generates huge amounts of data. Sending everything to the cloud as the center of aggregation for real time decisions and analytics is very expensive. However, cloud computing services are very convenient, so the idea of edge is to deliver infrastructure, data and AI services similar to the cloud but much closer to the source of data.

Not all of the data processing can be done on the devices themselves. They have limited power and processing capabilities, so a local processing center is needed, hence the increasing demand for edge computing solutions.
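As a rough illustration of what a local processing center can do, the sketch below collapses raw sensor readings into compact per-window summaries, so that only the summaries would need to travel further. The `WindowSummary` type, the `summarize_windows` function, and the sample temperature values are all hypothetical and chosen only for this example; a real edge node would pick its own aggregation rules.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List


@dataclass
class WindowSummary:
    """Compact record an edge node might forward instead of raw samples."""
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_windows(readings: Iterable[float], window_size: int) -> List[WindowSummary]:
    """Collapse a stream of raw readings into one summary per window."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    summaries: List[WindowSummary] = []
    window: List[float] = []
    for value in readings:
        window.append(value)
        if len(window) == window_size:
            summaries.append(
                WindowSummary(len(window), min(window), max(window), mean(window))
            )
            window = []
    if window:  # flush the final, partially filled window
        summaries.append(
            WindowSummary(len(window), min(window), max(window), mean(window))
        )
    return summaries


# Hypothetical temperature samples collected locally (assumed values).
raw = [20.0, 20.5, 21.0, 35.0, 20.2, 20.1, 19.9]
summaries = summarize_windows(raw, window_size=3)
print(f"{len(raw)} raw readings reduced to {len(summaries)} summaries")
```

Keeping the minimum and maximum next to the average means a spike, such as the 35.0 reading above, is still visible after aggregation.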

Traditionally, data is sent to the cloud or an on-premise infrastructure, but now there’s a strong trend towards local processing and device coordination.

Edge is expected to have an architecture and hardware base similar to the cloud, so many cloud solutions are being adapted to run on edge.


Automatic updates, platforms, easy management, and cloud-native stacks, all familiar from the cloud, are also present in edge.

Edge is therefore not just a local computer (one of the common misconceptions).

Latency of less than a millisecond is hard to achieve with the cloud. Multiple nodes (routers) manage the traffic, and it’s impossible to guarantee low latency with so many network devices controlled by different entities. With edge, it is much easier to achieve.

Local connectivity is much harder to disrupt than a connection to a public cloud, as there are far fewer nodes, fibers, cables, and routers along the way from the data source (device) to the data processing node.

Data such as camera imagery does not leave the edge, which makes it much harder to violate privacy, because the data is stored in only one location and often only temporarily.

Edge is not attempting to replace the public cloud but to work together with the public cloud. There are workloads better suited for edge and workloads better suited for the cloud. They will coexist for many years to come.

For instance, edge is better for controlling street lights in large cities to coordinate traffic and optimize its flow. Less time-sensitive data can be transmitted to the cloud and archived there for further analysis, to train more complex models and improve their efficiency.

5G

5G means much more than fast speeds. First of all, it means thousands more devices in the same area, generating tons of data to be processed and analyzed in real time. 5G is definitely an accelerator of edge computing adoption.

Running machine learning (ML) processing close to devices enables faster object detection and reactions, as well as better predictions delivered to the devices more quickly. Hence the term Edge AI, which means ML algorithms running on edge infrastructure, including edge servers and the devices themselves. Some of the processing is done directly on the devices (for instance, movement detection in cameras), while much of it is offloaded to nearby edge computing nodes.
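The split between on-device work and offloading can be sketched with simple frame differencing: the camera computes a cheap motion score itself and would pass only the frames that changed to a nearby edge node for heavier analysis. This is a minimal illustration, not how any particular camera works; the `motion_score` and `frames_to_offload` names, the tiny 2x2 frames, and the threshold are assumptions made for the example.

```python
from typing import List

Frame = List[List[int]]  # grayscale image as rows of 0-255 pixel values


def motion_score(previous: Frame, current: Frame) -> float:
    """Mean absolute per-pixel difference between two same-sized frames."""
    total = 0
    pixels = 0
    for prev_row, cur_row in zip(previous, current):
        for p, c in zip(prev_row, cur_row):
            total += abs(c - p)
            pixels += 1
    return total / pixels if pixels else 0.0


def frames_to_offload(frames: List[Frame], threshold: float) -> List[int]:
    """Return indices of frames that differ from the prior frame by more than threshold."""
    selected = []
    for i in range(1, len(frames)):
        if motion_score(frames[i - 1], frames[i]) > threshold:
            selected.append(i)
    return selected


# Hypothetical 2x2 frames: nothing moves until one pixel brightens in the third frame.
still = [[10, 10], [10, 10]]
moved = [[10, 200], [10, 10]]
frames = [still, still, moved, moved]
print(frames_to_offload(frames, threshold=5.0))  # prints [2]
```

Only the frame where the scene changed is selected, so the edge node never sees the static ones.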

More and more algorithms and data are open sourced. Developers start with an existing, openly available and free solution similar to the problem they are trying to solve. The trend is that more data, pretrained models and code will be publicly available for the development community to benefit from. It will enable us to create AI solutions faster and benefit from the lessons learned in the past.

Entire AI ecosystems will emerge, with adequate data, models and code to be reused as a foundation for building new solutions and modernizing existing ones.

Currently, a lot of edge software is closed-source and proprietary, and is bound to specific edge devices. Brian McCarson, from Intel, compared it to the old BlackBerry phones: locked-down ecosystems and user experiences.

The technology community really wants edge device software to be unlocked and opened up so that the whole community can innovate and iterate. However, that is not on the horizon yet, and the pace of change is not meeting expectations.

The latest achievements in software delivery and DevOps, well known from public and hybrid clouds, are still not as popular in the edge world. Containers, Kubernetes, and microservices architectures are expected to dominate here as well. In the future, the same management tools, updates, and security patches will be present on edge.


In a single factory there can be hundreds of devices programmed using tens of different languages and running in different environments.

The industry expects simplification, meaning a reduction in the number of options, so that more developers can enter the IoT area faster and more easily.

There are too many communication protocols. A simpler, standardized approach is expected here too, for the same reasons.

How long will it take? According to analysts, it may take 20+ years because of all the legacy hardware and software, but the process has already started and is accelerating.

AI models are a valuable source of business advantage, and a lot of effort and money is spent on training them (tens or hundreds of thousands of EUR/USD).

Devices can be physically intercepted by hackers and attempts will be made to decrypt and steal both the models and data.

Devices can also be attacked with poisoned models and data: models and/or data that have been slightly modified and can cause serious damage to property or put safety at risk.
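One common mitigation against a model being modified after it was published is to verify an integrity tag before loading it. The sketch below uses HMAC-SHA256 from the Python standard library; the key, the model bytes, and the function names are hypothetical. It only detects tampering with the model file and does not address data that was poisoned before training. A shared secret stored on a device that can be physically captured is itself at risk, so a real deployment would more likely use asymmetric signatures and hardware-backed key storage.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Return True only if the model matches the tag issued at publish time."""
    actual = sign_model(model_bytes, key)
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(actual, expected_tag)


# Hypothetical key and model contents, for illustration only.
key = b"hypothetical-shared-secret"
model = b"\x00\x01weights"
tag = sign_model(model, key)

tampered = model + b"\x01"  # a "slightly modified" model
print(verify_model(model, key, tag))     # prints True
print(verify_model(tampered, key, tag))  # prints False
```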

Security is the most commonly recognized weakness of current IoT AI deployments, but it’s improving fast.

Traditional time series analysis has proved its value and will continue to grow. Some experts claim that its golden era is over, but the growth in workloads devoted to time series analysis proves otherwise.

The fastest growth in AI is related to vision, in particular object recognition, defect detection, and security. A recently popular solution is face recognition combined with thermal imaging of people, to help decide whether they can be allowed to enter offices, hospitals and airports.

Incremental improvements in hardware accumulate over time, bringing better sensors for imaging. Better performance from tiny processors will enable new applications of edge technology that are not even fathomable today.

Even faster progress is expected to continue on the software side of edge and AI, where optimized algorithms and models will advance faster than hardware.

The media often focuses on autonomous cars. However, fully autonomous cars seem to be, well, (implicitly) postponed, and all the major tech players are focusing on other areas in order to show businesses the usefulness of edge AI.

We’ve seen this in recent years. AI is becoming a more and more ubiquitous aspect of all IT related activities, not a separate domain, but a regular part of almost every software solution.


Traditional models, once trained, are deployed and executed. Ever higher accuracy requirements demand a more flexible approach, in which models adjust themselves on the go based on the influx of real-world data. The optimal solution is to train large models in the cloud, deploy them to edge, and add smaller, simpler, just-in-time models on edge.
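A toy version of this split could look as follows: a fixed base model stands in for the large cloud-trained model, and a very small on-edge component learns a running correction from fresh local observations. The `ResidualCorrector` class, the linear `cloud_model`, the learning rate, and the constant local offset of 1.0 are all assumptions made purely for illustration; real just-in-time models would be richer than a single bias term.

```python
from typing import Callable


class ResidualCorrector:
    """Small on-edge model that tracks the base model's recent error as a running bias."""

    def __init__(self, base_model: Callable[[float], float], learning_rate: float = 0.5):
        self.base_model = base_model
        self.learning_rate = learning_rate
        self.bias = 0.0

    def predict(self, x: float) -> float:
        """Base prediction plus the locally learned correction."""
        return self.base_model(x) + self.bias

    def update(self, x: float, observed: float) -> None:
        """Move the bias toward the error seen on the newest real-world sample."""
        error = observed - self.predict(x)
        self.bias += self.learning_rate * error


def cloud_model(x: float) -> float:
    """Stand-in for a large model trained in the cloud and deployed unchanged."""
    return 2.0 * x


edge_model = ResidualCorrector(cloud_model)
for x in [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]:
    # Assumed local conditions: reality is offset by 1.0 from what the base model expects.
    edge_model.update(x, observed=2.0 * x + 1.0)

print(round(edge_model.bias, 3))
```

The base model is never retrained on the device; only the small correction changes, which keeps the on-edge update cheap.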

Machine learning systems benefit from large datasets of photographic data that are publicly available or bought from companies that specialize in data gathering.

This approach has recently hit the wall of what is possible.

Why? First of all, data privacy and compliance make it harder to collect data. People are more sensitive to what is happening with their data and data collection has become much harder than it used to be.

The second is biased data, including gaps in the data (for example, images of street crossings in Europe, which feature different types of trees, cars, people’s faces, and animals).

The third is economic. Obtaining real-world data is time-consuming and very expensive, and data labelling is even more expensive.

So what helps with that? We have observed an increase in the popularity of synthetic datasets. What does that mean? It means data generated by computers to train neural networks.

Computers can generate realistic-looking 3D images and animations with multiple lighting scenarios, weather simulations, and times of day or night, thousands of times faster and more easily than such data could be obtained from the real world. Virtual cameras can be placed in any location, with any angle and field of view.

Because we know exactly which pixel of a 2D image belongs to which 3D element of the scene, the labels are exact, and the accuracy is much higher than with any training based on real data.
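The reason labels come for free is that the generator already knows what it drew. The sketch below is a deliberately tiny 2D stand-in for a 3D renderer: it draws random rectangles into an image and, in the same loop, records the object id of every pixel in a mask. The `render_scene` function, the grid sizes, and the brightness range are hypothetical choices for this example only.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]


def render_scene(width: int, height: int, n_objects: int, seed: int) -> Tuple[Grid, Grid]:
    """Draw random rectangles; return (image, mask), where mask holds each pixel's object id."""
    rng = random.Random(seed)  # seeded so the same scene can be regenerated
    image = [[0] * width for _ in range(height)]
    mask = [[0] * width for _ in range(height)]  # 0 means background
    for object_id in range(1, n_objects + 1):
        w = rng.randint(1, max(1, width // 2))
        h = rng.randint(1, max(1, height // 2))
        left = rng.randint(0, width - w)
        top = rng.randint(0, height - h)
        brightness = rng.randint(50, 255)
        for y in range(top, top + h):
            for x in range(left, left + w):
                image[y][x] = brightness
                mask[y][x] = object_id  # later objects occlude earlier ones
    return image, mask


image, mask = render_scene(width=16, height=12, n_objects=3, seed=7)
labelled = sum(1 for row in mask for value in row if value != 0)
print(f"{labelled} of {16 * 12} pixels carry an exact object label")
```

No human labelling step is involved: the mask is produced by the same code that produces the image, so every drawn pixel has a label by construction.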

In our experience, the cost per image drops by a factor of tens of thousands.

Touch screens in public areas and buttons in elevators proved to be a threat to users because of COVID-19, and that is an important accelerator for technology to move faster towards a contactless future.

What does it mean from the user experience perspective? It means voice interaction, gesture interaction, real-time image recognition, and responding to emotions (facial expressions).

Examples include opening doors with voice and/or face recognition, opening boxes with QR codes displayed on a personal smartphone or smartwatch, and proximity devices instead of buttons.

Touch will soon be in the past.

Most AI models run in local data centers and some in the cloud. The movement to edge AI has only just started and is certainly going to shape the near future.

Edge is not a replacement for the cloud in typical business applications with centralized data processing, but for real-time and device applications it will progress even faster than it does today. The technologies are not as mature as those for the public cloud, but that is changing, and edge is gaining ground at lightning speed.

The demands of always connected modern ubiquitous computing require fast advancements in edge computing and AI, and we at Avenga are super excited to be an active part of this revolution.
