Avoid building automation that has no upgrade path.

For some teams, it’s better to start with multiple small clusters and run them on different versions. This approach is harder to manage, but it minimizes the blast radius when an upgrade goes wrong.

Start small, think about the day two upgrades, and give yourself some time to devise a strategy for cluster convergence.

Storage

K8s is a perfect fit for stateless servers and applications, whether or not they need to scale. For stateful workloads, working out storage will be hard.

One of the most viable options here is using ephemeral machines.

Docker images tend to accumulate quickly, so you’ll need to ensure your machines don’t run out of disk space unexpectedly. You can achieve this by keeping good machine rotation hygiene, as well as by expanding and contracting the cluster. Also, try attaching remote storage to your containers using StatefulSets.
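As a rough illustration of attaching remote storage through a StatefulSet, the sketch below builds a manifest in which each replica gets its own PersistentVolumeClaim. The workload name, image, mount path, size, and the `remote-ssd` storage class are all hypothetical placeholders; it also assumes a headless Service with the same name already exists and that your cluster has a storage class backed by remote storage.

```python
import json


def build_statefulset(name, image, mount_path, storage_size, storage_class, replicas=1):
    """Return a StatefulSet manifest (as a dict) with one volume claim per replica."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Assumption: a headless Service with this name exists.
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Mount the claimed volume inside the container.
                            "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                        }
                    ]
                },
            },
            # One PersistentVolumeClaim is created from this template per replica.
            "volumeClaimTemplates": [
                {
                    "metadata": {"name": "data"},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "storageClassName": storage_class,  # hypothetical class name
                        "resources": {"requests": {"storage": storage_size}},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    manifest = build_statefulset(
        "example-db", "example/db:1.0", "/var/lib/data", "20Gi", "remote-ssd", replicas=3
    )
    print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be piped into `kubectl apply -f -` once the placeholders are replaced with real values.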

Remember that your networking infrastructure has to be built in a way that helps isolate storage traffic. You must make sure it won’t bog down when you attempt to carry out multiple storage operations at once.

OS and host provisioning

Since Docker and containers are relatively new, expect to be using recent operating systems (kernels, drivers, etc.) when working with them. Unlike VMs, containers share the host kernel and do not offer the same level of isolation, so it’s crucial that you build, maintain, and patch machines properly from the get-go and then keep monitoring the entire system closely.

Likewise, expect to be doing a lot of reprovisioning. K8s allows you to shut down and rotate machines through the cluster, so take advantage of that and make it a regular failure management practice. It would be wise to assume that your K8s cluster won’t be static and that you’ll have to keep cycling machines through the system in an upgrade pattern.
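One way to picture regular machine rotation is a small planner that picks the oldest machines and lists the commands needed to retire them. This is only a sketch: the `Node` type, the node names, and the 30-day threshold are hypothetical, and it assumes a replacement machine is provisioned by whatever tooling you already use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    age_days: int


def rotation_plan(nodes, max_age_days):
    """Return kubectl commands that retire nodes older than max_age_days, oldest first."""
    stale = sorted(
        (n for n in nodes if n.age_days > max_age_days),
        key=lambda n: n.age_days,
        reverse=True,
    )
    commands = []
    for node in stale:
        # drain marks the node unschedulable and evicts its pods.
        commands.append(
            f"kubectl drain {node.name} --ignore-daemonsets --delete-emptydir-data"
        )
        # Remove the node object so the machine can be reprovisioned.
        commands.append(f"kubectl delete node {node.name}")
    return commands


if __name__ == "__main__":
    # Hypothetical inventory for illustration only.
    fleet = [Node("worker-a", 10), Node("worker-b", 45), Node("worker-c", 31)]
    for command in rotation_plan(fleet, max_age_days=30):
        print(command)
```

The planner only prints commands rather than running them, so the output can be reviewed and executed one node at a time, which keeps the rest of the cluster available while each machine is replaced.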

If you’re using a distro, you’ll need to consult its requirements.

Additional tips

- Use at least three servers for your K8s cluster to balance the workload and internal services.

- Make sure that the master components run separately from the containers.

- Run the kubeadm installation tool on the master.

- Set up three or more master nodes to ensure cluster availability and resilience.

- Allot three to seven nodes, each with 2GB RAM and an 8GB SSD, to the quorum (typically the etcd members); this is needed for recoverability.

- Only work with experts who are well-versed in troubleshooting and setting up load balancing. You don’t want to take any risks here.
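The advice about three to seven quorum nodes follows from simple majority arithmetic. The sketch below assumes the quorum is a majority-based consensus group (as etcd is); the function names are illustrative, not part of any tool.

```python
def quorum_size(members):
    """Smallest number of members that forms a strict majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failures_tolerated(members):
    """How many members can be lost while a majority still remains."""
    return members - quorum_size(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} members: quorum {quorum_size(n)}, tolerates {failures_tolerated(n)} failure(s)")
```

Under this assumption, three members survive one failure, five survive two, and seven survive three, while an even count such as four tolerates no more failures than three does. That is why odd cluster sizes are the usual choice.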

In conclusion

Using the cloud is clearly the easier choice when it comes to Kubernetes, but that doesn’t mean on-prem deployments aren’t worth the effort. If your organization is bound by security guidelines or has other reasons to control the stack fully, K8s can still bring all the benefits of cloud-native infrastructure when you run it on-prem. And it doesn’t matter whether you use OpenStack, VMware, or bare metal.

However, if you decide to use on-prem, you should know that Kubernetes alone, stripped of all the cloud services, won’t be able to support your application. You’ll need a plan on how to manage access control, load balancing, ingress, networking, provisioning, storage, and all the other vital infrastructure elements.

Start small, use VMs to run your clusters, and expect that getting the infrastructure to run smoothly will probably take some time. Additionally, plan for integration and assume you’ll have to do lots of operations work around your cluster.

Remember that Kubernetes isn’t a hammer that solves any problem. It’s not even that mature a technology yet, so be sure to use it the way it’s meant to be used, and if you need some help with that, do not hesitate to contact us!

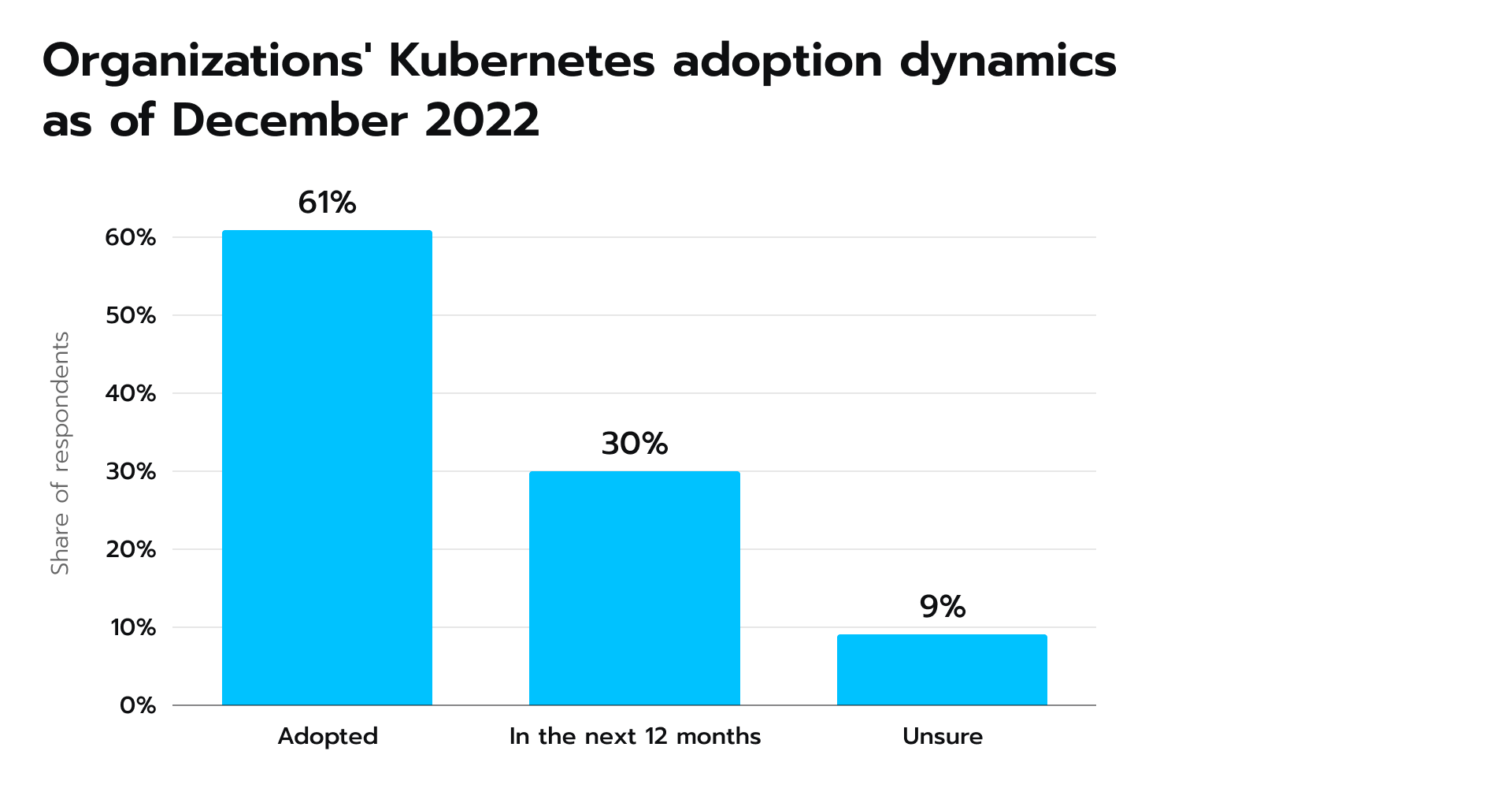

Figure 1.

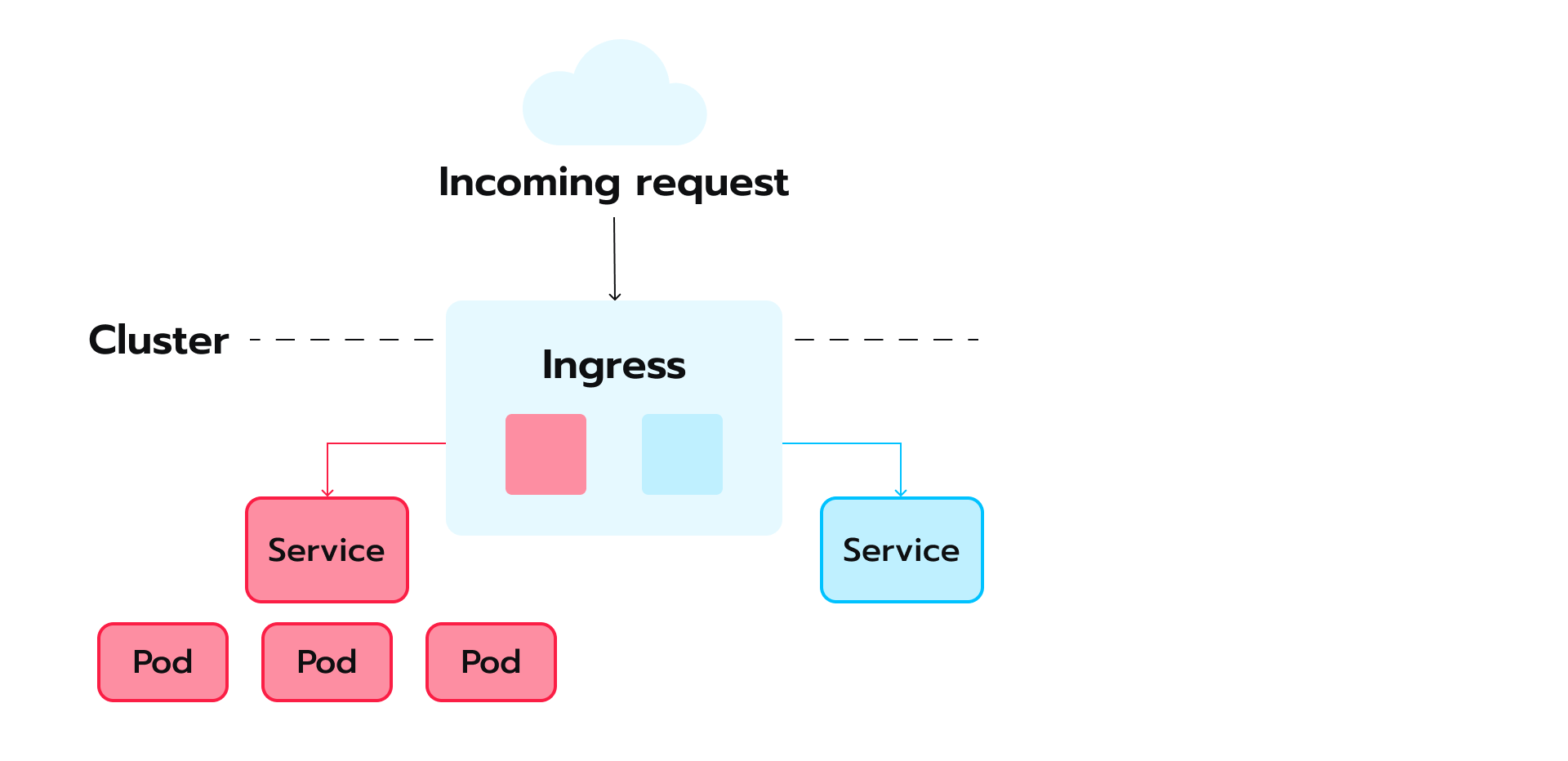

Figure 3.