→ Migrating data is not simply transferring information from one storage to another. It is the complex work of a QA team and it requires skill, expertise, tools, and resources.

So, it’s time to go through the customary steps that should be taken during Data Validation testing within the migration process:

1. Test planning and control

Test Planning is the most important activity in any test project. This step presupposes the following actions:

- Identify and agree on data migration requirements, identify the whole list of data cleaning requirements and understand all the dependencies and interactions with other systems.

- Define the scope. It’s necessary to pinpoint and describe all the things that need to be tested (data to be migrated, migration rules, data quality assessment).

- Define migration approach. Select techniques, determine test items and coverage. Decide on single migration (big bang), phased or incremental approach.

- Define risks ( business objectives, product, project and technical risks).

- Determine the size of the test efforts. It’s very important not to underestimate the required efforts for testing. It should be pointed out when and who is to be involved in testing, set up all the needed supportive hardware and software.

- Define the test completion criteria.

- Meticulous monitoring and control.

2. Test analysis and design

During Test Analysis activity, QA will create TCs with emphasis on how each item of data is mapped and migrated from source to target. It will also include a design of how the migration is validated through testing and define non-functional aspects to test.

A. Review test basis, analyze test items, specification and identify test conditions

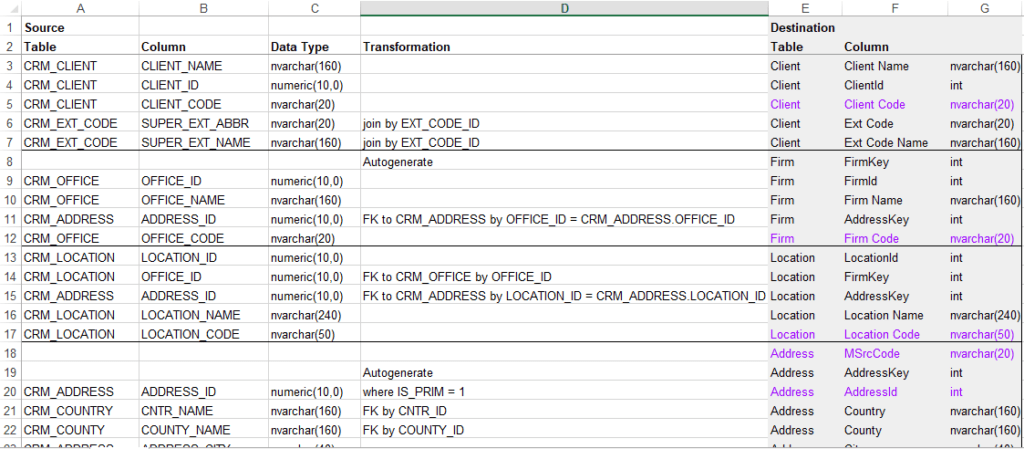

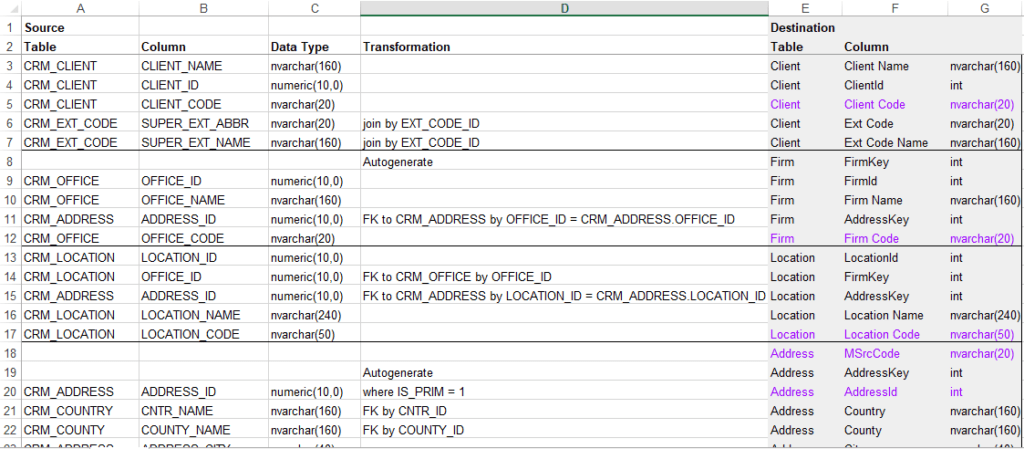

Review documentation and check the mapping of each data type in the legacy system and the new system. Make a note that during the migration, based on the transformation rules, some columns may be combined into one column; some column names, tables may change names or tables.

Then, according to the documentation, check the DB scheme to ensure that it includes mandatory fields, field names, field types, data types, etc., for both, the original data source and the destination system and find any mistakes or error areas and correct as much as possible.

A good mapping document is supposed to represent the following data from both source and target fields:

- Table name

- Field name

- Data types and length

- Transformation flow: how source tables and files should be joined in the new target data set, transformation logic, and/or business rules

Take into account if any fields that are required in the new application are not required in the old one and make sure that the value of these fields are not equal to NULL.

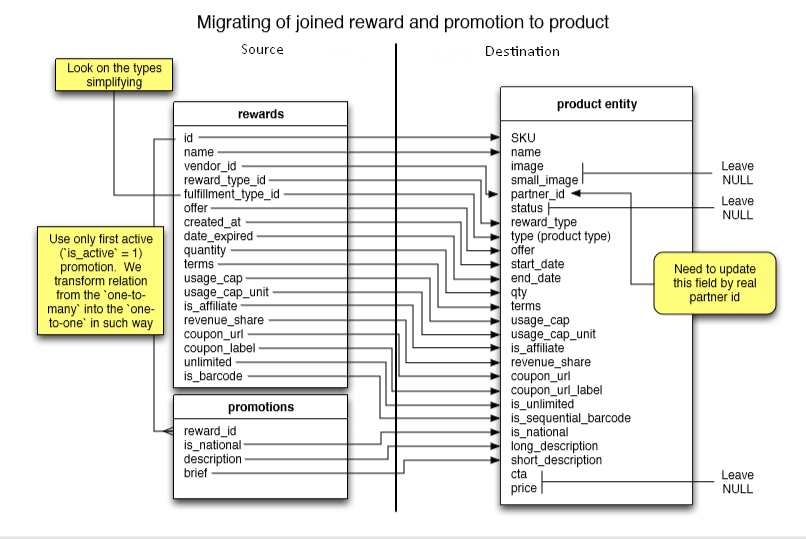

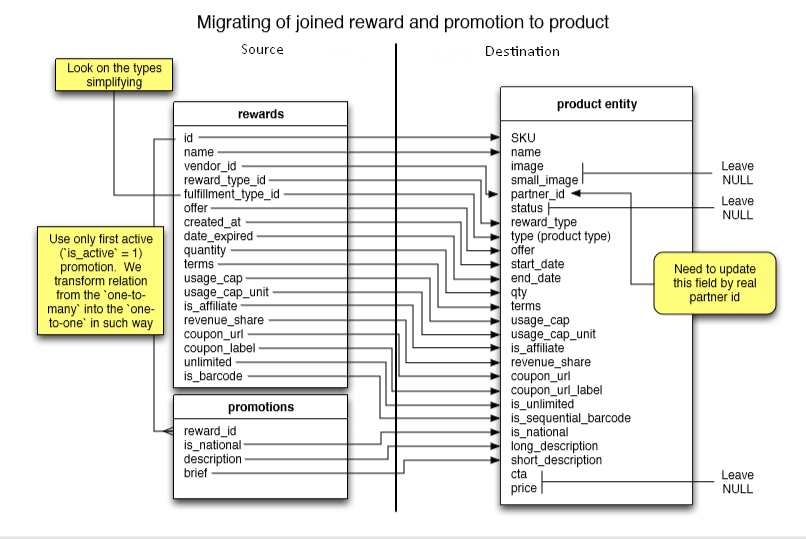

Mapping is a useful instrument for data loss prevention in case if you need to deploy tables or columns that have been renamed. It (mapping with business rules) can be represented in different ways, for example: in a simple table (excel) or in a graphical view.

Fig 1. Mapping table example

Fig 1. Mapping table example

Fig2. Mapping in graphical view example

Fig2. Mapping in graphical view example

B. Create TCs and a set of SQL queries to validate the data before and after migration

The following key points should be taken into account:

Data Completeness

Data Completeness includes :

Record count verification.

Record count checks consist of 2 scenarios:

- Record count for inserted Records. Use a requirement document so that you can identify what the expected result is.

- Record count for updated recor£ds. The number of records which are updated should match the record count in the source table (also using requirement document).

In order to get a number of a record in a target or source table we can use a simple query

Data Quality

Data Quality ensures that the data is correctly loaded into the destination tables/fields, and also that the application rejects, substitutes default values, alters, ignores, and reports invalid data correctly .

Comparing source and target data sets. For example, in order to compare data in source DB with destination DB two types of queries can be created:

- Query to extract data from the source database. For example:

- Query to extract data from the destination database. For example:

Verification Data validation. It includes the following:

- Data validation for updated records.

- Data validation for inserted records.

- Duplicate records check. Can be done using the next query:

Verification of the deleted records. Based on the requirements, some data can be deleted.

Verification of Distinct Values. For example, a requirements document specified that a column in the target table should have distinct columns. Then, the next query can be used:

SELECT DISTINCT <Column Name> FROM <Table Name>.

Verification of many source rows into one target row. A Requirements Document can specify that some rows should be combined into one. An example of the query:

Inspection of correct handling of invalid data types in the fields.

Inspection of the correct handling of the NULL values.

Data transformation and data integrity

- Check if the data transformation works according to the requirements and business rules.

- Check if new tables are created and if new columns are created with the proper data types (as specified in the design).

- Check the referential integrity between tables.

C. Design the test environment and identify test and support tools

→ Learn about data validation testing techniques and approaches to help you ensure the data you deal with is correct and complete.

3. Execute test cases

Ones migration has been performed, QA should start running TCs that are created during the design phase and compare results. How do you compare huge data files? There are a lot of free or commercial tools for that. Data can be compared in SQL Data Compare or Red Gate. Also, the exported files can be compared with ‘Total Commander’ or Excel.

Data validation will make sure that data migration was done, meeting all business rules and mapping, but it does not necessarily mean that in the destination environment everything works well. So, the next steps are really important. That is, we should perform:

- Non-functional testing, which includes the following:

- Security testing in order to make sure that no security vulnerabilities were opened up.

- Performance testing like load and stress tests to ensure system stability.

- Functional Application testing. Running the critical business processes in the destination environment is more than important, it’s vital. It often happens that there are still some issues that need to be resolved before the migration process is completed.

4. Evaluating exit criteria and reporting

This phase presupposes checking the following:

- Whether determined exit criteria are met.

- Determination if more tests are needed.

- Summary of the test results and report creation.

The most widespread issues during data migration testing

You know that testing is a live procedure. So any professional might come across the whole range of issues. Here, I’ll touch upon the most common ones. Those include:

- Process.

- Underestimated scope.

- Resources proficiency: hard and soft skills, experience, etc.

- Data inconsistency.

- Loss of Data.

Conclusion

As it’s more and more about data and its quality nowadays, businesses need to drive maximum value utilizing it. Migration of data is not just the process of physically moving data from one storage to another. When data is extracted from one source, transformed, and finally loaded into the destination place, it is the best time to check its quality. Data migration testing is a viable instrument which capacitates organizations get the best insights from their data, but it requires specialized skills, expertise, tools, and resources.

→ Explore Avenga’s quality management across various domains

Fig 1. Mapping table example

Fig 1. Mapping table example Fig2. Mapping in graphical view example

Fig2. Mapping in graphical view example