Avenga in numbers

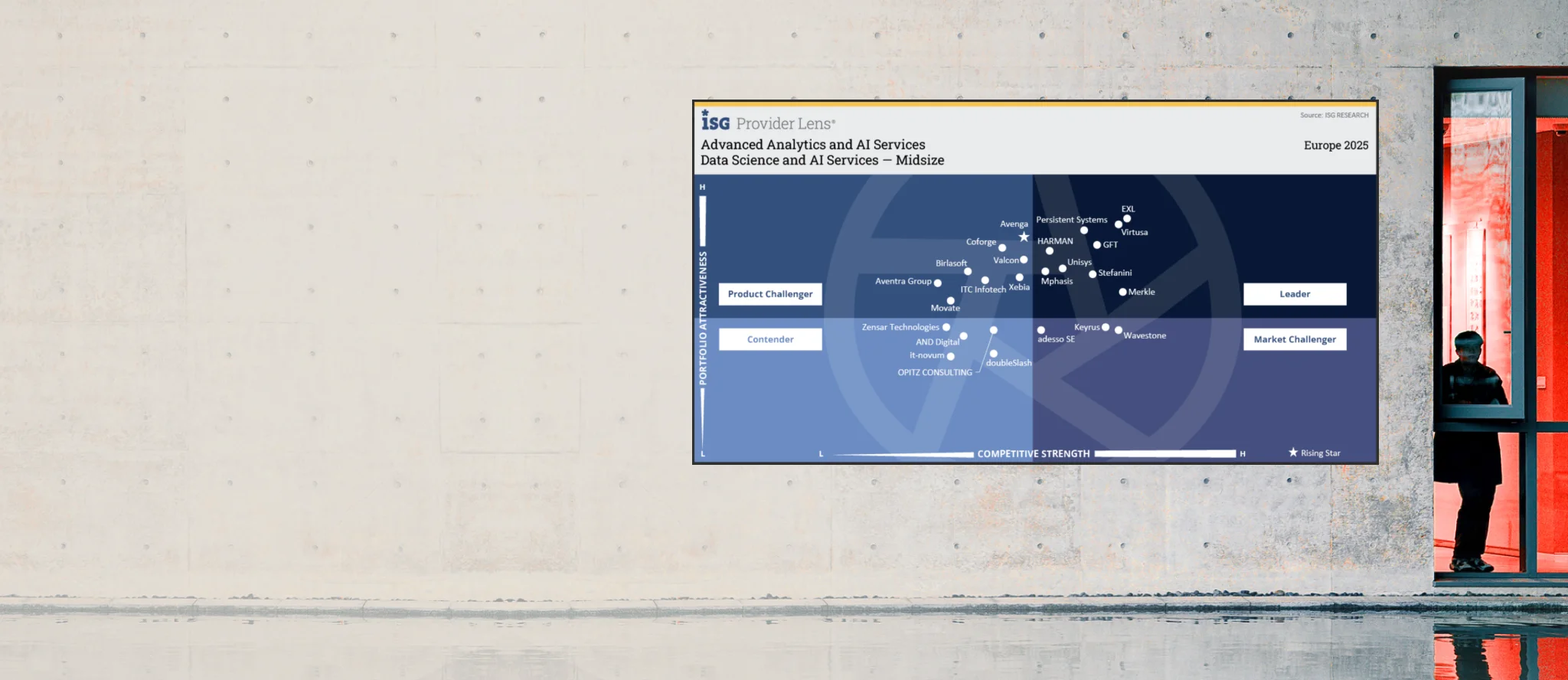

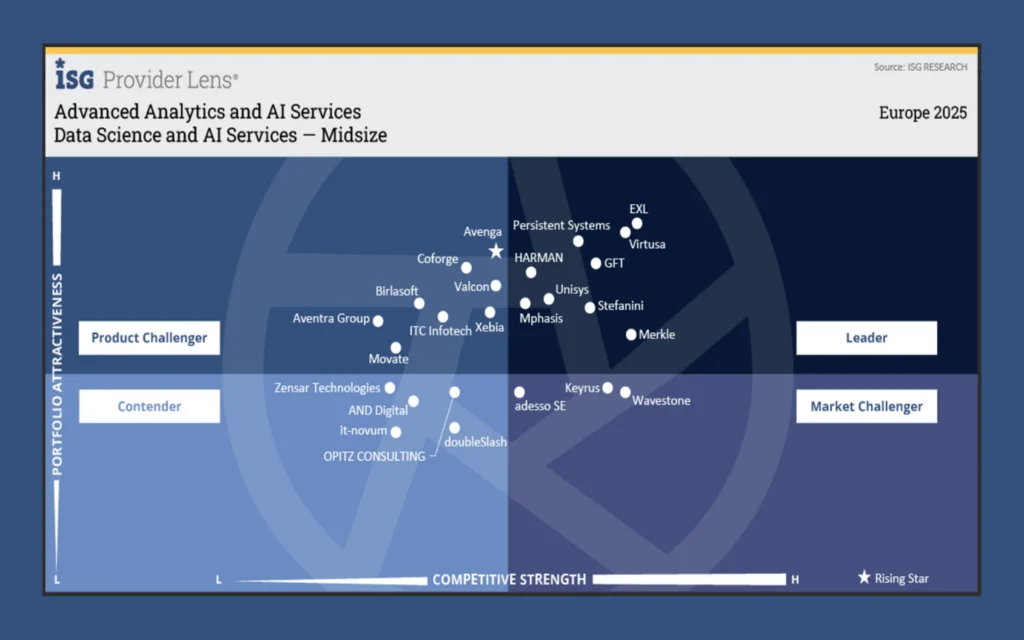

Avenga has been named Europe’s Rising Star for data and AI capabilities that are redefining how the market views customer-centricity in AI development services.

Industries we drive growth in

Automotive

Through advanced digital platforms and intelligent systems, we help automotive leaders innovate faster — modernizing engineering, production, and mobility services for safer, cleaner, more adaptive transportation.

Banking and Financial Services

We help financial institutions reinvent products, operations, and customer experiences through secure digital innovation, backed by trusted data intelligence and AI-enhanced decision-making.

Energy and Utilities

Our digital solutions drive the modernization of energy ecosystems, enabling resilient infrastructure, smarter resource management, and sustainable growth.

Life Sciences

By unifying digital platforms, automation, and intelligent insights, we accelerate scientific and commercial innovation, helping organizations bring therapies to market faster and improve patient outcomes.

Manufacturing

Manufacturers gain higher quality, greater agility, and better efficiency through our innovation-led approach, powered by connected systems, automation, and predictive intelligence for smarter production.

Media

Digital platforms that enhance content creation, distribution, and audience engagement allow media organizations to innovate at scale, delivering personalized and dynamic experiences.

iGaming

Our flexible, secure, and data-driven ecosystems accelerate innovation in iGaming, improving player experience, strengthening integrity, and enabling rapid global expansion.

Mobility

Mobility providers transform operations and user experiences with innovative platforms that connect services, optimize performance, and leverage real-time insights for seamless movement.

Retail and Consumer

Commerce is reimagined through intelligent, innovative platforms that modernize supply chains, elevate customer relevance, and create frictionless shopping experiences.

Telecommunications

Telecom operators enhance reliability and scale through our innovation-focused digital architectures, automation, and intelligent optimization — enabling more personalized and resilient connectivity.

Proven return on technology investment with Avenga

Elevating logistics risk management with intelligent automation

Slashing review cycles by 86% with automated fraud detection

Sophisticated pharma patient engagement platform

At the heart of everything we do is a passion for solving real-world challenges through technology. Join us and shape the future of industries with us.