The concept of remote procedure calls (RPC) is very old, but it remains key to building distributed systems and will continue to be so.

An RPC is a function call where the function does not execute in our own process, but remotely: on a different machine, or in a different process on the local machine.
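To make the definition concrete, here is a minimal sketch of a remote call. It uses XML-RPC from Python's standard library only because it needs no installation; it is not gRPC, and the `add` function and the loopback setup are made up for the illustration.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    """Executes on the server side, not in the caller's own code path."""
    return a + b


def start_server():
    # Port 0 asks the operating system for any free port.
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(add, "add")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_remote_add(a, b):
    server = start_server()
    host, port = server.server_address
    try:
        # To the caller this looks like a local call, but the arguments
        # travel over HTTP and the result comes back in the response.
        with ServerProxy(f"http://{host}:{port}/") as proxy:
            return proxy.add(a, b)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(call_remote_add(2, 3))  # 5
```

The caller never sees the serialization or the network hop, which is exactly the convenience (and the hidden cost) that every RPC technology in this article shares.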

This isn’t a software history article, but we have to mention CORBA, Java RMI, and .NET Remoting. Having done so, let’s quickly move on to SOAP.

Simple Object Access Protocol (SOAP) dominated the early 2000s and delivered on the promise of an API contract independent of the programming language and transmission protocol. SOAP was well supported by all major programming languages and is still in use today, especially in scenarios where standardized end-to-end message security is required, such as communication with the tax office and many B2B exchanges.

Huge XML payloads marked this era in software history.

However, SOAP was not well liked by developers because of all the contracts, heavy XML payloads, parsing, and the hell of XSLT (the XML transformation language).

There were benefits such as cross-platform compatibility and explicit contract definition, but the development community was in search of something simpler.

The idea was to forget the other protocols and just send requests and responses over HTTP, using different HTTP verbs (GET / POST / PUT) to perform different operations.

Of course, the original idea of REST was totally different. It was not even supposed to be an RPC, but many other technologies became RPCs, and eventually so did REST.

There were no real standards, only conventions. For instance, you would use GET for read operations, and POST and PUT for write/update operations. Most developers followed along, but in reality the workflow required you to call the API to see whether it worked, how it worked, and how it behaved when something was wrong with the call arguments or a server-side error occurred.

JSON was selected because it was very easy for a JavaScript client (the browser) to parse, initially even with a simple eval call (a security nightmare at the time).

Support for all the development platforms arrived quickly and today it’s almost invisible, as we just create server APIs and clients consume them without any problems.

It works very well, almost magically, when the client and server are created using the same toolkit. It is not as smooth when two different technologies are used, but it is still acceptable.

JSON was a huge step back in consistency and standardization, but an even greater leap toward simplicity for the majority of developers. The tools and IDEs created a very pleasant developer experience, which we all liked.

New standards and de facto standards appeared (OpenAPI). Tools like Swagger enabled developers to enjoy more predictability and structure, bringing back a little bit of what was lost after abandoning SOAP.

For classical clients (browser, mobile) and servers (http, REST), with JSON as the payload, it all worked really well, so why even bother with anything else?

Everybody uses REST/JSON and it’s working for us. For many it’s hard to imagine doing it any other way, or even to imagine a reason to do so.

https://grpc.io/docs/quickstart/

The goal of gRPC was to combine the benefits of stricter API definitions with smaller binary payloads, without giving up the compatibility benefits of multiple languages and technology platforms.

The scenario that created the need for a better, modern RPC was a complex API infrastructure: billions of containers running even more services, communicating in simple and complex scenarios, and needing to do it all efficiently.

We are talking about Google scale.

Another important factor was the rise in popularity of HTTP/2, because classical REST/JSON was still built around the connectionless, stateless nature of the old HTTP/1.1.

Plus, plain HTTP was almost gone by then, replaced by HTTPS (HTTP over TLS).

The cost of opening and closing connections, then parsing and decoding JSON messages, becomes something that needs to be addressed when multiplied by millions.

So Google created gRPC in 2015.

    service HelloService {
      rpc SayHello (HelloRequest) returns (HelloResponse);
    }

    message HelloRequest {
      string greeting = 1;
    }

    message HelloResponse {
      string reply = 1;
    }

OK, but we’re not Google, Facebook, Amazon, or Microsoft. Isn’t this just a problem of scale that they face because they have billions of users? Does it apply to us at all?

Strict definition of contracts

gRPC can transmit different kinds of messages, but the default is Protocol Buffers 3. Example:

    // The greeter service definition.
    service Greeter {
      // Sends a greeting
      rpc SayHello (HelloRequest) returns (HelloReply) {}
    }

    // The request message containing the user's name.
    message HelloRequest {
      string name = 1;
    }

    // The response message containing the greetings
    message HelloReply {
      string message = 1;
    }

Why?

Because, fortunately, many errors are detected at an early stage, when the code is generated and compiled, and not later by calling the API and wondering whether it works. At least there is type checking here.

Protocol Buffers 3 defines clear rules for types and for backward compatibility, and enforces strict data types. Unlike version 2, it has no required fields: a field that is absent from a message simply takes its default value.

Messages are encoded as binary in a very efficient, machine-friendly way, so it takes very little effort to serialize and deserialize them in a C++, Go, Java, or .NET based service.
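To get a feeling for the difference in size, the following sketch hand-encodes a tiny message in the Protocol Buffers wire format and compares it with the same data as JSON. The message layout (`string name = 1; int32 id = 2;`) and the sample values are assumptions made up for the illustration, and the encoder only handles non-negative integers. In real projects the generated code does this for you.

```python
import json


def varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while value > 0x7F:
        out.append((value & 0x7F) | 0x80)  # high bit set: more bytes follow
        value >>= 7
    out.append(value)
    return bytes(out)


def encode_person(name: str, user_id: int) -> bytes:
    """Hand-encode a message with `string name = 1; int32 id = 2;`."""
    raw_name = name.encode("utf-8")
    return (
        varint((1 << 3) | 2) + varint(len(raw_name)) + raw_name  # field 1: length-delimited
        + varint((2 << 3) | 0) + varint(user_id)                 # field 2: varint
    )


as_json = json.dumps({"name": "Ada", "id": 150}).encode("utf-8")
as_proto = encode_person("Ada", 150)

print(len(as_json), len(as_proto))  # 26 8
```

Field names never travel over the wire, only the small field numbers, which is where most of the saving comes from in this example. The ratio will differ for other payloads.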

Canonical JSON mapping: A JSON equivalent can be created, for instance, for humans to read it, or to create a REST/JSON interface for newer gRPC services.

API changes are covered by built-in support for versioning: for instance, adding a new field to an API definition won’t break existing clients and does not require you to redeploy everything.
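Why does adding a field not break older clients? Every field on the wire is prefixed with its number and wire type, so a reader can step over fields it does not know. The sketch below is a simplified hand-written decoder, not the real Protocol Buffers library; the field names and the sample bytes are assumptions for the illustration, and only two wire types are handled.

```python
def read_varint(data: bytes, pos: int):
    """Return (value, next_position) for the varint starting at pos."""
    result = 0
    shift = 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: this was the last byte
            return result, pos
        shift += 7


def decode_known_fields(data: bytes, known: dict) -> dict:
    """Decode a message, keeping only the field numbers listed in `known`."""
    message = {}
    pos = 0
    while pos < len(data):
        tag, pos = read_varint(data, pos)
        field_number, wire_type = tag >> 3, tag & 0x07
        if wire_type == 0:    # varint
            value, pos = read_varint(data, pos)
        elif wire_type == 2:  # length-delimited (strings, bytes, nested messages)
            length, pos = read_varint(data, pos)
            value = data[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} is not handled in this sketch")
        if field_number in known:
            message[known[field_number]] = value
        # Any other field number is stepped over without an error.
    return message


# A newer server sends field 1 ("hello") plus a new field 2 (the number 42).
new_message = b"\x0a\x05hello" + b"\x10\x2a"

# An older client only knows about field 1.
old_client_view = decode_known_fields(new_message, {1: "message"})
print(old_client_view)  # {'message': b'hello'}
```

The same mechanism is why field numbers must never be reused for a different meaning once a contract is in use.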

gRPC is, first of all, an efficient message exchange technology.

In classical REST over HTTP/1.1, multiple HTTPS calls tend to require multiple connections. gRPC multiplexes its calls over a single HTTP/2 connection, which solves that problem. It can maintain a persistent connection with bidirectional message exchange and streaming over secure HTTP/2 channels. As a result, latency is much lower and overall throughput is much higher.

How much faster? Benchmark results vary with the workload, but reported figures range from ten to a hundred times faster than REST/JSON, especially when there are thousands of small API requests per second or many calls between microservices. CPU usage is reported to drop by a factor of 3 to 10.

JSON encoding/decoding can consume 20%-40% of the processing time of fast APIs, compared to the actual API work. With gRPC, that share drops to 5%-10%.

From another perspective, you can serve more clients using the same hardware and network infrastructure than before.

There are new API design options because of the streaming and bidirectional nature of gRPC. With REST, the recommendation was to avoid overly fine-grained contracts and not to assume two-way communication: make the connection, send the request, and wait for the response. Now it’s more like opening a dialog channel. Streaming data back and forth is much easier with gRPC, while it is unnatural in REST.

gRPC works across multiple platforms and languages; there’s no going back to single-platform remoting.

Supported languages: C++, Java, ObjectiveC, C#, PHP, Python, NodeJS, Go, Android, Ruby, and others.

Authentication can be done per call (of course) but also per connection, which is a very good option to have, because repeated authentication has a performance cost.

Context, such as a call ID or authentication tokens, can be preserved between calls, kept for the duration of a connection, or propagated automatically as key-value pairs. This ability is built into the protocol and is available to all servers and clients.

A context can be cancelled or given a deadline.

Tracing, logging and monitoring can easily be plugged into the protocol.

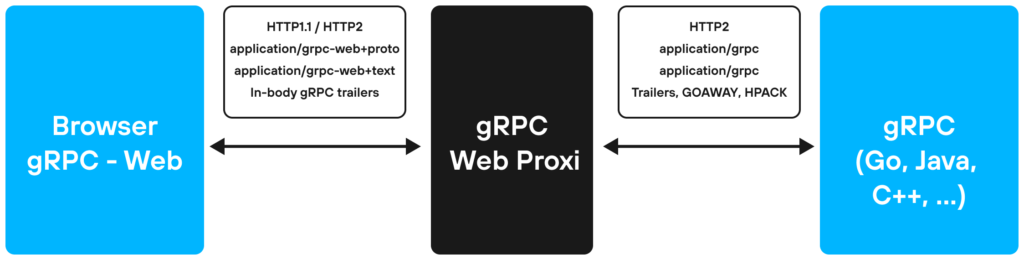

Browser support is one of the key questions that needed to be addressed. There’s no easy path as there is with JSON. There is gRPC-Web, which uses a translation layer to work with JavaScript in the browser. So it is there, and it is working fine.

https://grpc.io/blog/state-of-grpc-web/

Also there’s development support with the extension called gRPC-Web Developer Tools.

https://chrome.google.com/webstore/detail/grpc-web-developer-tools/ddamlpimmiapbcopeoifjfmoabdbfbjj

There are multiple client applications which utilize the latest web technologies, but they are still waiting for better web features (streaming, bi-directional improvements) to make it closer to native.

https://github.com/johanbrandhorst/grpc-web-compatibility-test

Google strongly recommends its own client, which is used for Google Maps and Gmail (as reference applications), and no one dares to deny these examples.

The key to using gRPC is to create .proto files to define the contracts. Those files are the definitions from which everything else is derived.

The simplest file, for an echo service that returns its argument, looks like this:

    message EchoRequest {
      string message = 1;
    }

    message EchoResponse {
      string message = 1;
    }

    service EchoService {
      rpc Echo(EchoRequest) returns (EchoResponse);
    }

It does not look too scary, except maybe the strange “= 1” parts. They are used to specify the field numbers for the binary encoder.
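To show what the encoder does with those numbers, here is a small sketch that builds the key written in front of every field value: the field number shifted left by three bits, combined with a wire type. The function name `tag_bytes` is made up for the illustration; the generated code normally hides all of this.

```python
def tag_bytes(field_number: int, wire_type: int) -> bytes:
    """Build the key that precedes a field value on the wire."""
    key = (field_number << 3) | wire_type
    out = bytearray()
    while key > 0x7F:
        out.append((key & 0x7F) | 0x80)  # high bit set: more bytes follow
        key >>= 7
    out.append(key)
    return bytes(out)


STRING = 2  # wire type for length-delimited values such as strings

print(tag_bytes(1, STRING).hex())   # 0a
print(tag_bytes(15, STRING).hex())  # 7a
print(tag_bytes(16, STRING).hex())  # 8201
```

Field numbers 1 to 15 fit into a single byte, while higher numbers need two or more, so it pays to give the smallest numbers to the most frequently used fields.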

Types can be embedded and there are various data types:

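    // Note: "required" and "[default = ...]" are proto2 features.
    // proto3 removed both, so this file declares proto2 explicitly.
    syntax = "proto2";

    message Person {
      required string name = 1;
      required int32 id = 2;
      optional string email = 3;

      enum PhoneType {
        MOBILE = 0;
        HOME = 1;
        WORK = 2;
      }

      message PhoneNumber {
        required string number = 1;
        optional PhoneType type = 2 [default = HOME];
      }

      repeated PhoneNumber phone = 4;
    }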

The next step is to prepare the environment for your language of choice.

Then you generate the client code.

Of course this can be part of the continuous integration pipeline you already have.

Creating .proto files is, noticeably, the additional step that enables everything. But from my discussions with others, it seems to be regarded as something that shouldn’t be needed. For older developers it recalls bad memories of old RPC technologies; for the younger generation it’s just another thing to learn that doesn’t quickly bring visible (functional) benefits.

It’s an explicit cost, kind of like an investment that will return in the near future.

It is not natural for front-end development, as browsers cannot natively parse Protocol Buffers 3. There are readily available JS-ProtoBuffers, two-way converters, but they add another layer of communication and potential errors.

If the browser is the only consumer of your API and your API is very CRUD-like, the benefits of gRPC won’t be so clearly visible.

Being non-human-readable is not really an issue. How many people read complex JSON documents anyway? There are readers for gRPC that make messages human-readable for debugging.

There are no clear benefits when API calls are long and infrequent. gRPC is focused on large-scale API infrastructures with thousands of different services. On the other hand, count the microservices in your organization: you may be surprised to find there are already thousands of them, and the benefits of gRPC can easily be seen there.

It’s also another tool in the toolset, making things even more complex. And even if not, many teams are overwhelmed with functional changes and are hard pressed not to ‘waste’ their time on technological tasks, especially when REST/JSON is working fine, and it’s usually working well.

For Google, it’s obvious that gRPC is their child. It’s supported 110% by Google, in its cloud infrastructure and in the Go language. So if you’re actively using the Google Cloud Platform or developing applications in Go, gRPC is a natural choice.

https://grpc.io/ – the root for general gRPC information

https://grpc.io/docs/tutorials/basic/go/ – how to do it in Go

Microsoft treats gRPC as a first-class citizen in .NET Core, so just run:

dotnet new grpc -o GrpcGreeter

This creates a simple hello world project; no additional downloads, plugins or hacking are required.

Microsoft also supports it within its Blazor technology. They use gRPC Web for keeping communication fast between the browser client and the Blazor server. It really proves Microsoft is no longer bound to its old NIH (not invented here) syndrome and is open to use the best available solution on the market.

And (no surprise here) there are plugins available for ProtoBuffers3 to create .proto files in Visual Studio Code.

https://docs.microsoft.com/en-us/aspnet/core/grpc/?view=aspnetcore-3.1

Update: Microsoft released official gRPC Web for .NET

https://devblogs.microsoft.com/aspnet/grpc-web-for-net-now-available/

Examples of Java gRPC projects reminded me a little bit of the old Java remoting times, with stubs and so on. But it is cross-platform and works fine, and there are starter projects for Spring Boot plus (of course) Maven support.

Both servers and clients can be created normally using … Java.

https://grpc.io/docs/tutorials/basic/java/

There’s no doubt that one of the most popular languages in the world is supported.

python -m pip install grpcio

There are libraries that can create both servers and clients.

https://grpc.io/docs/tutorials/basic/python/

NodeJS is based on JavaScript, so a binary-based gRPC does not seem to be a natural choice. Despite that, it is well supported in NodeJS.

There’s a proto loader library to enable communication between a NodeJS application and a gRPC server. You can also create a gRPC server in NodeJS.

https://grpc.io/docs/tutorials/basic/node/

There are multiple migration stories online of moving from REST/JSON to gRPC. It usually takes weeks, not months, to move and the benefits return quickly, including faster interaction with mobile clients, and a much better responsiveness of services for other services and end users.

There’s also a compatibility option for REST clients using proxies that perform bidirectional REST-gRPC translation, so the migration doesn’t have to happen in one shot and it can be done gradually.

The success stories repeat the benefits of gRPC: performance, much lower cost of maintenance of client libraries, API versioning, and predictability/reliability (strict contracts).

If it works, don’t fix it. This is a centuries-old engineering mentality.

However, be careful with that attitude if you plan a long career in IT. Things break for various reasons, so “unlearn what you have learned” said Yoda, and “it’s a fashion business after all” many experienced IT people say.

gRPC is much more than a fashion or the result of boredom with the established REST/JSON API world. There are things stopping its general adoption, but its steadily increasing popularity has made me cross my fingers for this technology.

What I’d suggest to Google and other major players is to make it a native in-browser technology, that would speed up things significantly. Google is a major player in the Blink engine and could use that to provide better gRPC support in the open-source spirit, of course.

Let’s see if gRPC becomes the RPC of choice for the 2020s; a new decade is already here!

Do you use gRPC in production? What would you like to share from your practice?

Andrew Petryk, Java Engineering Manager

“We have tried gRPC several times, and every time we fell back to something different.

Last time I checked, RSocket was doing great in terms of performance. But hey, last week the official grpc-kotlin lib was released so I would give it a try.”

Frederik Berg, Team Lead Engineering Berlin

“gRPC in general has proved to be a lightweight and reliable solution for our RPC needs in our microservice oriented architecture.

Strong typing is a benefit in a complex setup and makes the code more concise.

For many cases it replaced overengineered and heavyweight solutions like RabbitMQ and has provided:

When we initially realized that most, if not all, of our RabbitMQ usage was RPCs, we were inspired by Spotify’s success story with gRPC.

Getting rid of the queue allowed us to reduce our k8s node sizes and save money, but the other effects were even more beneficial to the project.

We do have plans to test gRPC for frontend to backend communication as well but haven’t yet found the time to do so.

Using language independent serialization, in the form of protobuf, got us out of our selfmade NodeJS only prison, where we were kinda forced to use our self written de-/serialization wrappers and which allowed us to be open for micro services written in different languages again.

We’d like to see even better toolings and bindings for NodeJS and Typescript in the future.

We haven’t yet found a clean way to avoid having what felt like duplicate type definitions, without adding constant compiling of typescript to protobuf or vice versa into our workflow.”
